I read the HTML5Rocks article[1] and made some notes for myself. I also read parts of the spec[2]. I have yet to read this guide to performance[3].

An AudioContext is the entry point into everything. You use it to create sound sources, connect them to the sound destination (speakers), and play the sounds.

1 Simplest example

It’s node based. The sound source is a node. You connect it to a sound destination like the speakers. If you instead connect the sound source to a GainNode and then connect that to the speakers, you can control the volume. A BiquadFilterNode does low/high/band pass filters.

    var context = new AudioContext();

    // Play a 440 Hz sine tone for two seconds.
    function tone() {
        var osc440 = context.createOscillator();
        osc440.frequency.value = 440;
        osc440.connect(context.destination);
        osc440.start();
        osc440.stop(context.currentTime + 2);
    }
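The GainNode variant described above could look roughly like this. It is a sketch rather than code from these notes: the name `quiet_tone` and its `volume` parameter are made up for illustration, and the AudioContext is passed in as `ctx` so the function does not depend on a global.

```javascript
// Hypothetical helper: oscillator -> gain -> speakers.
// `ctx` is an AudioContext; `volume` is a linear gain (1.0 = unchanged).
function quiet_tone(ctx, volume) {
    var osc = ctx.createOscillator();
    osc.frequency.value = 440;

    var gain = ctx.createGain();
    gain.gain.value = volume;

    osc.connect(gain);
    gain.connect(ctx.destination);

    osc.start();
    osc.stop(ctx.currentTime + 2);
    return gain; // keep a handle so the volume can be changed while it plays
}
```

Calling `quiet_tone(context, 0.25)` should play the same tone as `tone()` at a quarter of the amplitude.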

2 Timed start/stop

When playing a sound you can tell it what time to start (or stop). This way you can schedule several sounds in sequence and not have to hook into the timer for each one.

2.1. Serial

    // Play 440 Hz for one second, then 880 Hz for one second after a 0.1 s gap.
    function two_tones() {
        var osc440 = context.createOscillator();
        osc440.frequency.value = 440;
        osc440.connect(context.destination);
        osc440.start();
        osc440.stop(context.currentTime + 1 /* seconds */);

        var osc880 = context.createOscillator();
        osc880.frequency.value = 880;
        osc880.connect(context.destination);
        osc880.start(context.currentTime + 1.1);
        osc880.stop(context.currentTime + 2.1);
    }

2.2. Parallel - telephone tones

I was hoping to use the same oscillator and start/stop it, but it won’t start multiple times, so I created multiple oscillators. An alternative structure would be to hook both oscillators up to a gain node, and then use a custom piecewise curve to control gain between 0 and 1. Then to clean up, use a setTimeout to stop the oscillators at the very end.

    // Play all frequencies in freqs together, four times over:
    // time_on seconds of sound followed by time_off seconds of silence.
    function touch_tone(freqs, time_on, time_off) {
        var T = context.currentTime;
        var total_time = time_on + time_off;
        for (var i = 0; i < 4; i++) {
            var t = T + i * total_time;
            freqs.forEach(function(freq) {
                var osc = context.createOscillator();
                osc.frequency.value = freq;
                osc.connect(context.destination);
                osc.start(t);
                osc.stop(t + time_on);
            });
        }
    }

There are some other frequency combinations and timings listed here[4].
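To see what touch_tone schedules without playing anything, the timing can be pulled out into a pure function. This is only a sketch: `tone_schedule` and its `repeats` and `T` parameters are hypothetical, and the busy-signal numbers are the commonly cited North American values rather than anything taken from the linked page.

```javascript
// Hypothetical helper: list the start/stop times touch_tone would schedule,
// relative to a starting time `T`, without touching any audio API.
function tone_schedule(freqs, time_on, time_off, repeats, T) {
    var events = [];
    var total_time = time_on + time_off;
    for (var i = 0; i < repeats; i++) {
        var t = T + i * total_time;
        freqs.forEach(function(freq) {
            events.push({freq: freq, start: t, stop: t + time_on});
        });
    }
    return events;
}

// Commonly cited North American busy signal: 480 Hz + 620 Hz, 0.5 s on, 0.5 s off.
var busy = tone_schedule([480, 620], 0.5, 0.5, 4, 0);
console.log(busy.length);   // 8 events: 2 oscillators x 4 repeats
console.log(busy[2]);       // { freq: 480, start: 1, stop: 1.5 }
```

The matching sound would be `touch_tone([480, 620], 0.5, 0.5)`.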

3 Parameter control

Parameters like frequency are objects with a value field that you can read/write, along with methods that schedule that value programmatically[5], using linear or exponential curves, or a custom piecewise linear curve. I needed to call exponentialRampToValueAtTime twice, once to set the beginning point and once to set the end point:

    // Square wave that glides from 440 Hz to 880 Hz over two seconds,
    // then holds 880 Hz until it stops at T + 4.
    function tone_rising() {
        var T = context.currentTime;
        var osc = context.createOscillator();
        osc.type = "square";
        osc.frequency.exponentialRampToValueAtTime(440, T);      // beginning point
        osc.frequency.exponentialRampToValueAtTime(880, T + 2);  // end point
        osc.connect(context.destination);
        osc.start();
        osc.stop(T + 4);
    }
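The difference between the linear and exponential curves is easier to see with numbers. The sketch below evaluates both kinds of ramp in plain JavaScript, using the interpolation formulas the Web Audio spec gives for them. The function names are made up for illustration, and the real AudioParam does this work internally.

```javascript
// Value of an exponential ramp from v0 (at time t0) to v1 (at time t1), sampled at t.
// Both values must be nonzero and have the same sign.
function exp_ramp_value(v0, v1, t0, t1, t) {
    if (t <= t0) return v0;
    if (t >= t1) return v1;
    return v0 * Math.pow(v1 / v0, (t - t0) / (t1 - t0));
}

// Same ramp, but linear, for comparison.
function linear_ramp_value(v0, v1, t0, t1, t) {
    if (t <= t0) return v0;
    if (t >= t1) return v1;
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0);
}

console.log(exp_ramp_value(440, 880, 0, 2, 1).toFixed(2));   // 622.25
console.log(linear_ramp_value(440, 880, 0, 2, 1));           // 660
```

Halfway through the octave glide the exponential ramp sits at 440 * sqrt(2), which is six semitones up, so the pitch rises evenly. The linear ramp is already at 660 Hz, a fifth up, so it covers most of the musical distance in the first half.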

4 ADSR

I used the linearRampToValueAtTime function to make ADSR (attack-decay-sustain-release) envelopes, which are just piecewise linear curves of volume levels:

    // Build a gain node whose volume follows an ADSR envelope starting at time T.
    // a, d, s, r are durations in seconds; sustain is the level held during s.
    function adsr(T, a, d, s, r, sustain) {
        var gain = context.createGain();
        function set(v, t) { gain.gain.linearRampToValueAtTime(v, T + t); }
        set(0.0, -T);   // T + (-T) = time 0: anchor the curve at silence
        set(0.0, 0);
        set(1.0, a);
        set(sustain, a + d);
        set(sustain, a + d + s);
        set(0.0, a + d + s + r);
        return gain;
    }

    function note(freq, offset) {
        var T = context.currentTime;
        var env = {a: 0.1, d: 0.2, s: 0.4, r: 0.2, sustain: 0.2};
        var osc = context.createOscillator();
        osc.type = "sawtooth";
        osc.frequency.value = freq;
        var gain = adsr(T + offset, env.a, env.d, env.s, env.r, env.sustain);
        osc.connect(gain);
        gain.connect(context.destination);
        osc.start(T + offset);
        osc.stop(T + offset + env.a + env.d + env.s + env.r + 3);
    }

    function knock(freq, offset) {
        var T = context.currentTime;
        var env = {a: 0.025, d: 0.025, s: 0.025, r: 0.025, sustain: 0.7};
        var osc = context.createOscillator();
        osc.frequency.value = freq;
        var gain = adsr(T + offset, env.a, env.d, env.s, env.r, env.sustain);
        osc.connect(gain);
        gain.connect(context.destination);
        osc.start(T + offset);
        osc.stop(T + offset + env.a + env.d + env.s + env.r + 3);
    }

    // Short chirp: the pitch doubles over 0.1 s while a brief envelope fades it out.
    function tweet(freq, offset) {
        var T = context.currentTime;
        var gain = adsr(T + offset + 0.03, 0.01, 0.08, 0, 0, 0);
        var osc = context.createOscillator();
        osc.frequency.value = freq;
        osc.frequency.setValueAtTime(freq, T + offset);
        osc.frequency.exponentialRampToValueAtTime(freq * 2, T + offset + 0.1);
        osc.connect(gain);
        gain.connect(context.destination);
        osc.start();
        osc.stop(T + offset + 0.15);
    }

    function tweets() {
        for (var i = 0; i < 10; i++) {
            tweet(1000 * (1 + 2*Math.random()), i*0.2);
        }
    }

The Tweets example was supposed to be bubbles from this book[6] but it ended up sounding like tweets instead.

John L tells me that brass instruments have more frequency modulation during the 10-50ms attack and then it tapers off during the sustain. It’s not the simple model of only the gain of the waveform being shaped by the envelope.
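To check an envelope's shape without listening to it, the breakpoints that adsr() schedules can be computed and sampled in plain JavaScript. This is a sketch under my own naming: `adsr_points` and `envelope_at` are hypothetical helpers, and they only mirror the gain envelope, not the frequency modulation described above.

```javascript
// Hypothetical helper: the [time, level] breakpoints that adsr() schedules,
// with times relative to the start of the note.
function adsr_points(a, d, s, r, sustain) {
    return [
        [0, 0.0],
        [a, 1.0],
        [a + d, sustain],
        [a + d + s, sustain],
        [a + d + s + r, 0.0]
    ];
}

// Linearly interpolate the envelope level at time t (seconds after note start).
function envelope_at(points, t) {
    if (t <= points[0][0]) return points[0][1];
    for (var i = 1; i < points.length; i++) {
        var t0 = points[i - 1][0], v0 = points[i - 1][1];
        var t1 = points[i][0], v1 = points[i][1];
        if (t < t1) {
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0);
        }
    }
    return points[points.length - 1][1];  // after the release: silent
}

var points = adsr_points(0.1, 0.2, 0.4, 0.2, 0.2);  // the env used by note()
console.log(envelope_at(points, 0.05));  // halfway up the attack, about 0.5
console.log(envelope_at(points, 0.5));   // during the sustain, 0.2
console.log(envelope_at(points, 2.0));   // after the release, 0
```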

5 Simpler envelope

On this page[7] there’s a simpler envelope: use exponentialRampToValueAtTime to decay towards zero. An exponential ramp can’t target exactly 0, so the code ramps to 0.01 instead.

    function cowbell() {
        var T = context.currentTime;

        // two detuned square waves mixed together
        var osc1 = context.createOscillator();
        osc1.type = "square";
        osc1.frequency.value = 800;
        var osc2 = context.createOscillator();
        osc2.type = "square";
        osc2.frequency.value = 540;

        // the envelope: start at 0.5 and decay exponentially over one second
        var gain = context.createGain();
        osc1.connect(gain);
        osc2.connect(gain);
        gain.gain.setValueAtTime(0.5, T);
        gain.gain.exponentialRampToValueAtTime(0.01, T + 1.0);

        var filter = context.createBiquadFilter();
        filter.type = "bandpass";
        filter.frequency.value = 800;
        gain.connect(filter);
        filter.connect(context.destination);

        osc1.start(T);
        osc2.start(T);
        osc1.stop(T + 1.1);
        osc2.stop(T + 1.1);
    }

6 Modulating parameters

We can vary the volume (tremolo) or frequency (vibrato) by connecting an oscillator to another parameter.

6.1. Tremolo

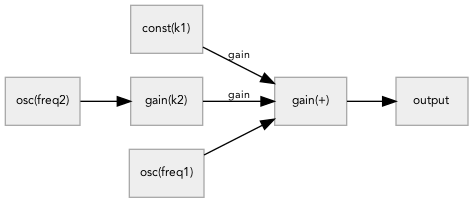

We want to vary the gain (volume) with an oscillator. Set the output to (k1 + k2*sin(freq2*t)) * sin(freq1*t). WebAudio doesn’t allow that directly but we can compose:

- A parameter’s effective value is its own value plus the sum of its inputs. Set gain.value to k1 and then connect the second part using .connect().

- The second part has to go through another gain for k2.

- The second part’s oscillator has frequency freq2.

So this would be:

    function tremolo() {
        var k1 = 0.3, k2 = 0.1;
        var T = context.currentTime;

        // osc2 is the slow (10 Hz) modulator, scaled by k2 through gain2
        var osc2 = context.createOscillator();
        osc2.frequency.value = 10;
        var gain2 = context.createGain();
        gain2.gain.value = k2;
        osc2.connect(gain2);

        // osc1 is the audible 440 Hz tone; its gain becomes k1 + k2*sin(...)
        var osc1 = context.createOscillator();
        osc1.frequency.value = 440;
        var gain1 = context.createGain();
        gain1.gain.value = k1;
        gain2.connect(gain1.gain);
        osc1.connect(gain1);
        gain1.connect(context.destination);

        osc1.start(T);
        osc2.start(T);
        osc1.stop(T + 3);
        osc2.stop(T + 3);
    }

6.2. Vibrato

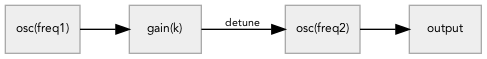

Connect an oscillator to the pitch (the detune parameter, measured in cents), which is roughly sin((freq1 + k * sin(freq2*t)) * t):

    function vibrato() {
        var T = context.currentTime;

        // osc1 is the slow (10 Hz) modulator
        var osc1 = context.createOscillator();
        osc1.frequency.value = 10;

        // osc2 is the audible 440 Hz tone
        var osc2 = context.createOscillator();
        osc2.frequency.value = 440;

        // scale the modulator to +/- 100 cents before it reaches detune
        var gain = context.createGain();
        gain.gain.value = 100.0;
        osc1.connect(gain);
        gain.connect(osc2.detune);
        osc2.connect(context.destination);

        osc1.start(T);
        osc2.start(T);
        osc1.stop(T + 3);
        osc2.stop(T + 3);
    }
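Since detune is measured in cents (1200 per octave), a gain of 100 makes the pitch swing one semitone either side of 440 Hz. A small sketch of the conversion, with `detuned_frequency` as a hypothetical helper name:

```javascript
// Frequency that results from detuning `freq` by `cents` (100 cents = 1 semitone).
function detuned_frequency(freq, cents) {
    return freq * Math.pow(2, cents / 1200);
}

console.log(detuned_frequency(440, 100).toFixed(2));   // 466.16
console.log(detuned_frequency(440, -100).toFixed(2));  // 415.30
console.log(detuned_frequency(440, 1200));             // 880
```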

7 Fill AudioBuffer directly

An AudioBuffer is normally loaded by decoding a WAV/MP3/OGG file, but I can fill it directly:

    // Fill a two-second mono buffer by calling fill(t, env, state) once per sample.
    function make_buffer(fill, env) {
        var count = context.sampleRate * 2;
        var buffer = context.createBuffer(1, count, context.sampleRate);
        var data = buffer.getChannelData(0 /* channel */);
        var state = {};   // scratch space that persists between fill() calls
        for (var i = 0; i < count; i++) {
            var t = i / context.sampleRate;
            data[i] = fill(t, env, state);
        }
        var source = context.createBufferSource();
        source.buffer = buffer;
        return source;
    }

    function fill_thump(t, env, state) {
        var frequency = 60;
        return Math.sin(frequency * Math.PI * 2 * Math.pow(t, env.s));
    }

    function fill_snare(t, env, state) {
        var prev_random = state.prev_random || 0;
        var next_random = Math.random() * 2 - 1;
        var curr_random = (prev_random + next_random) / 2;
        state.prev_random = next_random;   // remember for the next sample
        return Math.sin(120 * Math.pow(t, 0.05) * 2 * Math.PI) + 0.5 * curr_random;
    }

    function fill_hihat(t, env, state) {
        var prev_random = state.prev_random || 0;
        var next_random = Math.random() * 2 - 1;
        var curr = (3*next_random - prev_random) / 2;
        state.prev_random = next_random;   // remember for the next sample
        return curr;
    }

    function drum(fill, env) {
        var source = make_buffer(fill, env);
        var gain = adsr(context.currentTime, env.a, env.d, env.s, env.r, env.sustain);
        source.connect(gain);
        gain.connect(context.destination);
        source.start();
    }

The drum functions are from this code[8].

7.1. Wave shaper

Instead of making a buffer for a given function, use createWaveShaper() and give it a shape. This would especially be useful for 0-1 phasors by using [0,1] as the shape. But it can also be useful for clipping a signal that exceeds the -1:+1 range by using [-1,+1] as the shape.

(No demo - just a note to myself)
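To see what those two shapes do, here is a sketch that applies a curve to a single sample the way I understand WaveShaperNode to work: input -1 maps to the first entry, +1 to the last, with linear interpolation in between and clamping outside. The `shape` function is a hypothetical stand-in for the node, not part of WebAudio.

```javascript
// Hypothetical helper: apply a WaveShaperNode-style curve to one sample.
// Input -1 maps to the first curve entry, +1 to the last, values in between
// are linearly interpolated, and inputs outside -1..+1 are clamped.
function shape(curve, x) {
    var n = curve.length;
    var pos = (n - 1) / 2 * (x + 1);
    if (pos <= 0) return curve[0];
    if (pos >= n - 1) return curve[n - 1];
    var k = Math.floor(pos);
    var f = pos - k;
    return (1 - f) * curve[k] + f * curve[k + 1];
}

var clip = new Float32Array([-1, 1]);   // identity inside -1..+1, clips outside
var phasor = new Float32Array([0, 1]);  // remaps -1..+1 to 0..1

console.log(shape(clip, 0.5), shape(clip, 3));      // 0.5 1
console.log(shape(phasor, -1), shape(phasor, 0));   // 0 0.5
```

With the real node, the same arrays would be assigned to the curve property of the node returned by context.createWaveShaper().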

8 Switch sounds

    function start_switch(offset, a, d, freq) {
        var T = context.currentTime + offset;

        // short burst of noise shaped by an attack/decay envelope
        var noise = make_buffer(fill_hihat, {a: a, d: d, s: 0, r: 0, sustain: 0});
        var gain1 = adsr(T, a, d, 0, 0, 0);
        noise.connect(gain1);

        var filter1 = context.createBiquadFilter();
        filter1.type = "bandpass";
        filter1.frequency.value = freq;
        filter1.Q.value = 12;
        gain1.connect(filter1);

        // feedback loop: delay -> filter2 -> gain2 -> delay
        var delay = context.createDelay();
        // delayTime is an AudioParam: set .value (assigning to delayTime itself is ignored)
        delay.delayTime.value = 0.050;
        filter1.connect(delay);

        var filter2 = context.createBiquadFilter();
        filter2.type = "bandpass";
        filter2.frequency.value = 700;
        filter2.Q.value = 3;
        delay.connect(filter2);

        var gain2 = context.createGain();
        // likewise set gain.value; otherwise the feedback gain stays at its default of 1
        gain2.gain.value = 0.01;
        filter2.connect(gain2);
        gain2.connect(delay);
        delay.connect(context.destination);

        noise.start(T);
        noise.stop(T + 0.1);
    }

    function multi_switch() {
        start_switch(0, 0.001, 0.020, 5000);
        start_switch(0.030, 0.001, 0.050, 3000);
        start_switch(0.140, 0.001, 0.020, 4000);
        start_switch(0.150, 0.001, 0.050, 7000);
    }

Why does this “ring”? I don’t understand. I’m following the Switches chapter of Designing Sound but don’t understand why my results are different from his.

9 Motor

The Motors chapter of Designing Sound divides the motor into a rotor and stator.

    var rotor_level = 0.5;

    // Read a slider's current value as a number.
    function get_slider(id) {
        return parseFloat(document.getElementById(id).value);
    }

    function fill_one(t, env, state) { return 1.0; }

    // Phasor in 0..1 raised to a power, giving a sharp pulse once per cycle.
    function fill_phasor_power(t, env, state) {
        var phase = (t * env.freq) % 1.0;
        return Math.pow(phase, env.power);
    }

    function rotor() {
        var T = context.currentTime;

        // brush: band-passed noise
        var noise = make_buffer(fill_hihat, {});
        var filter1 = context.createBiquadFilter();
        filter1.type = "bandpass";
        filter1.frequency.value = 4000;
        filter1.Q.value = 1;
        noise.connect(filter1);
        var gain1 = context.createGain();
        gain1.gain.value = get_slider('brush-level');
        filter1.connect(gain1);

        // rotor: a constant level
        var constant = make_buffer(fill_one, {});
        var gain2 = context.createGain();
        gain2.gain.value = get_slider('rotor-level');
        constant.connect(gain2);

        // gain3 mixes brush and rotor; its gain is driven by the phasor below
        var gain3 = context.createGain();
        gain3.gain.value = 0;
        gain1.connect(gain3);
        gain2.connect(gain3);

        var freq = get_slider('motor-drive');
        var drive = make_buffer(fill_phasor_power, {power: 4, freq: freq});
        drive.loop = true;
        drive.connect(gain3.gain);
        gain3.connect(context.destination);

        noise.start(T);
        drive.start(T);
        constant.start(T);
        noise.stop(T + 1);
        drive.stop(T + 1);
        constant.stop(T + 1);
    }

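The interactive version of this demo has three sliders (Drive, Brush, and Rotor) that get_slider() reads through the element ids motor-drive, brush-level, and rotor-level. Set the parameters, then click to play.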

This procedurally generated sound was used in http://trackstar.glitch.me/intro[9]! (source[10])

10 Karplus-Strong algorithm

See this explanation[11] and also this explanation[12].

There’s a WebAudio demo here[13] that uses hand-written asm.js (!) but it doesn’t work well when it’s in a background tab. There’s a much fancier demo here[14] using the same library (which isn’t open source licensed).

There’s another demo here[15] that uses very little code.

(I didn’t write my own demo)
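For reference, the basic form of the algorithm fits in a few lines: fill a delay line with one period of noise, then keep feeding back the average of two adjacent delayed samples. The sketch below is my reading of the textbook version and is not taken from any of the linked demos. The function name, the `decay` parameter, and the injectable `random` function are all assumptions made for illustration.

```javascript
// Minimal Karplus-Strong sketch: returns a Float32Array of plucked-string samples.
// `random` is any function returning values in [0, 1), e.g. Math.random.
function karplus_strong(frequency, seconds, sampleRate, decay, random) {
    var period = Math.round(sampleRate / frequency);  // delay line length in samples
    var count = Math.floor(seconds * sampleRate);
    var data = new Float32Array(count);
    for (var i = 0; i < count; i++) {
        if (i < period) {
            // the "pluck": one period of white noise in -1..+1
            data[i] = random() * 2 - 1;
        } else {
            // feed back the average of two adjacent samples from one period ago
            var a = data[i - period];
            var b = (i - period - 1 >= 0) ? data[i - period - 1] : 0;
            data[i] = decay * 0.5 * (a + b);
        }
    }
    return data;
}

var samples = karplus_strong(220, 1, 44100, 0.996, Math.random);
console.log(samples.length);   // 44100
```

The samples could then be copied into an AudioBuffer with buffer.getChannelData(0).set(samples), much like make_buffer does for the drum sounds.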

11 Other

Like Pure Data and SuperCollider and probably other DSP software, WebAudio has parameters that are updated per audio sample (“a-rate” in WebAudio, “audio rate” in Pure Data) and parameters that are updated less often (“k-rate” in WebAudio, “control rate” in Pure Data). This probably shouldn’t be visible to me.

There’s a duration field on an audio buffer – not sure about this.

Other possibly useful nodes:

- StereoPannerNode (2D)

- PannerNode (3D space, doppler effects, conical area, requires you to set the listener position)

- ConvolverNode (reverb) - convolution parameters generated by firing an “impulse” ping and then recording reflections. Impulse responses can be found on the internet.

- DelayNode

- IIRFilterNode (more general than BiquadFilterNode but less numerically stable?)

- OscillatorNode sine/square/triangle/sawtooth, or custom controlled by PeriodicWave

- WaveShaperNode (“non-linear distortion effects”)

- ScriptProcessorNode (run your own js function to process each chunk of audio buffer – may be deprecated)

- DynamicsCompressorNode (raises volume of soft parts, lowers volume of loud parts, used in games)

WebAudio provides all this so that it can run it on a high priority audio thread separate from the main javascript execution thread. (Maybe this is why ScriptProcessorNode is deprecated)

Why do we need all this special processing for audio, which is under 200k/sec of data, but not for video, which can be gigabytes/sec of data? It’s about latency. (Thanks to this article[16] for making me think about this.) Video is played at 60 frames/second (one frame every 16 milliseconds) but audio is played at 44,100 samples/second (one sample every 22 microseconds), an interval roughly 700 times shorter. Video needs high bandwidth; audio needs low latency. Operating systems run tasks at around 60-100 times per second, far too slowly to produce samples one at a time, so audio work has to be done with buffers. You want to keep buffers small to be able to react quickly, but you want to keep buffers large to avoid running out of data.
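The numbers in that tradeoff are easy to work out. This sketch assumes 16-bit stereo at 44,100 Hz. The 128-frame size is the block size WebAudio renders in, and 4096 is just an example of a larger buffer. The helper names are made up.

```javascript
// Seconds of audio held by one buffer of `frames` samples per channel.
function buffer_latency(frames, sampleRate) {
    return frames / sampleRate;
}

// Uncompressed data rate in bytes per second.
function bytes_per_second(sampleRate, channels, bytesPerSample) {
    return sampleRate * channels * bytesPerSample;
}

var rate = 44100;
console.log(bytes_per_second(rate, 2, 2));                                     // 176400
console.log((1e6 / rate).toFixed(1) + " microseconds per sample");             // 22.7
console.log((1000 * buffer_latency(128, rate)).toFixed(1) + " ms for 128");    // 2.9
console.log((1000 * buffer_latency(4096, rate)).toFixed(1) + " ms for 4096");  // 92.9
```

A 128-frame buffer has to be refilled about every 3 ms, which is much more often than a 60-100 Hz scheduler tick. A 4096-frame buffer only needs refilling about every 93 ms, but it adds that much delay before any change can be heard.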

11.1. More other

- Google’s using AI to generate music[17].

- Try triangle wave → gain ×10 → waveshaper [-1,+1]

- Try a low pass filter (<5000 Hz) to cut out some sudden audio switch distortion

- This procedural audio generator[18] makes “metal” music (but I think it’s using mp3/wav samples underneath)

- Musical Chord Progression Generator[19]

- Reverb[20] is something you can measure by emitting an “impulse” and then recording what reflects off of all the surfaces. You can then use convolution to apply this to all the sounds you generate.

- Downloadable versions of the Designing Sound examples[21]

My overall experience playing with WebAudio in 2016 was unpleasant. I’ve heard the Mozilla API[22] seemed nicer but I didn’t get a chance to use it. I thought maybe I was doing something wrong but others have had bad experiences too[23]. The whole system seems to have too many specific node types and not enough flexibility to make your own. By mid-2021 Audio Worklets became available across modern browsers, but they weren’t around when I wrote this page in 2016.